Exercise may actually be bad for you! A professor says he stumbled upon this "potentially explosive" insight. The New York Times has been quick to peddle it. And couch potatoes descend on it like vultures on road kill. But professors can get it wrong, too.

Before we judge the verity of the "exercise may be bad" claim, let's first look at how the media present it to us. We shall use the recent article in The New York Times, headlined "For Some, Exercise May Increase Heart Risk". The first paragraph confronts us with a journalist's preferred procedure for feeding us contentious scientific claims: presenting an authoritative author with stellar academic credentials and a publication list longer than your arm. While that is certainly better than having, say, Paris Hilton as the source of scientific insights, it is a far cry from actually investigating such claims. Which is what we want to do now.

The basis of the exercise-may-be-bad claim is a study which investigated the question "whether there are people who experience adverse changes in cardiovascular risk factors" in response to exercise [1]. The chosen risk factors in question were some of the usual suspects: systolic blood pressure, HDL-cholesterol, triglycerides and insulin. The research question: Are there people whose risk factors actually get worse when they change from sedentary to more active lifestyles?

Sounds simple enough to investigate. Put a group of couch potatoes on a work-out program for a couple of weeks and see how their risk factors change. Only it is not that simple. In the realm of biomedicine, every measurement of every biomarker is subject to (a) errors in measurement and (b) other sources of variability. This makes it virtually impossible for you to see exactly the same results on your lab report for, say, blood pressure, cholesterol, glucose or any other parameter, when you get them measured two or more days in a row. Even if you were to eat exactly the same food every day and to perform exactly the same activities.

Now imagine you conducted an intervention study on your couch-potato subjects and found that their risk factors had changed after a couple of weeks of exercise. You could, in theory, be seeing nothing but random variation caused by the error inherent in such measurements.
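To make that concrete, here is a minimal sketch in Python. It is not taken from the study: the number of subjects, the "true" blood pressure of 120 mmHg and the measurement noise of 5 mmHg are all assumptions chosen for illustration. Every simulated subject has exactly the same true blood pressure before and after, so any "change" is pure measurement noise.

```python
import random
import statistics

def simulate_noise_only(n_subjects=1000, true_bp=120.0, noise_sd=5.0, seed=1):
    """Measure each subject twice with NO true change; return after-minus-before."""
    rng = random.Random(seed)
    diffs = []
    for _ in range(n_subjects):
        # Both readings share the same true value; only the noise differs.
        before = true_bp + rng.gauss(0.0, noise_sd)
        after = true_bp + rng.gauss(0.0, noise_sd)
        diffs.append(after - before)
    return diffs

diffs = simulate_noise_only()
# Share of subjects whose reading "rose" by more than 5 mmHg (assumed cut-off).
apparent_rise = sum(d > 5.0 for d in diffs) / len(diffs)

print(f"mean apparent change:  {statistics.mean(diffs):+.2f} mmHg")
print(f"SD of apparent change: {statistics.stdev(diffs):.2f} mmHg")
print(f"share 'rising' by more than 5 mmHg: {apparent_rise:.1%}")
```

Under these assumptions the average change comes out close to zero, yet a sizeable share of subjects appears to have "worsened", although nothing about them has changed.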

To avoid falsely interpreting such a variation as a change in one direction or the other, it makes good sense to know the bandwidth of these errors for each biomarker before you embark on interpreting the results of your study. Which is what the authors of this particular study did. They took 60 people and measured their risk factors three times over three weeks. From these measurements they were able to calculate the margin of error. Actually, they didn't do this for this particular paper; they had done this measurement as an ancillary study in the HERITAGE study performed earlier. The HERITAGE study had investigated the effects of a 20-week endurance training program on various risk factors in previously sedentary adults. Whether heritability plays a role in this response was a key question. That's why this study recruited entire families, that is, parents up to the age of 65, together with their adult children.
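How might such a margin of error be derived from repeated measurements? The sketch below shows one common approach, not necessarily the exact formula the authors used: pool the within-person variance of the repeated readings, take the square root, and use twice that value as the margin. The data are simulated, and the spread between people (10 mmHg), the measurement noise (4 mmHg) and the factor of 2 are assumptions for illustration only.

```python
import math
import random
import statistics

def within_subject_sd(repeats):
    """Pooled within-subject SD from a list of per-person repeated readings."""
    variances = [statistics.variance(person) for person in repeats]
    return math.sqrt(statistics.mean(variances))

# Hypothetical data: 60 people, 3 readings each (as in the ancillary study design).
rng = random.Random(42)
repeats = []
for _ in range(60):
    true_value = rng.gauss(120.0, 10.0)      # assumed spread between people
    readings = [true_value + rng.gauss(0.0, 4.0) for _ in range(3)]  # assumed noise
    repeats.append(readings)

sd_w = within_subject_sd(repeats)
margin = 2 * sd_w   # assumed rule: changes beyond 2 x SD count as "real"

print(f"within-subject SD: {sd_w:.1f} mmHg")
print(f"margin of error:   {margin:.1f} mmHg")
```

The point of pooling within each person is that differences between people do not inflate the estimate; only the day-to-day wobble of the same person's readings counts.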

I mention this because the paper, which we are deciphering now, is a re-hash of the HERITAGE study's results, to which the authors added the data of another 5 exercise studies. That's what is called a meta-analysis. In this case it covers more than 1600 people, with the HERITAGE study delivering almost half of them.

Fast forward to the question of how many of those participants had experienced a worsening of at least 1 risk factor: close to 10%. That is, about 10% of the participants had an adverse change in a risk factor in excess of the margin of error, which I mentioned earlier. I'm going to demonstrate the results using systolic blood pressure and the HERITAGE study as the example. I chose this example for three reasons: First, blood pressure is the most serious of the investigated risk factors. Second, the HERITAGE study delivers the largest share of the participants. Third, the effects seen and discussed with respect to blood pressure and HERITAGE apply similarly to the other 5 studies and risk factors.

But before we go there I need to familiarize you with a basic concept of statistics. It is called the "normal distribution of data". It is an amazing observation of how data are distributed when you take many measurements. Let's take blood pressure as an example.

If you were to measure the blood pressure values for every individual living in your village, city or country, you could easily calculate the average blood pressure for this group of people. You could put all those data into a chart such as the one in figure 1.

Figure 1

On the x-axis (the horizontal axis) you write down the blood pressure values, and on the y-axis (the vertical axis) you write down the number of observations, that is, how often a particular blood pressure reading has been observed. You will find that most people have a blood pressure value pretty close to the average. Fewer people will have values that lie further away from this average, and very few people will have extreme deviations from the average.

It so turns out that when you map almost any naturally occurring value, be it blood pressure, IQ or the number of hangovers over the past 12 months, the curve you get from connecting all the data points in your graph will look very similar in shape. Some curves are a bit flatter and broader, while others are a bit steeper and narrower. But the underlying shape is called the "normal distribution", and it means just that: It's how data are normally distributed over a range of possible values. The curve's shape, being reminiscent of a bell, has led to this curve being called the "bell curve".
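The phrase "most people are close to the average" can be put into numbers. The following sketch uses Python's standard-library `NormalDist`; the mean of 120 mmHg and the standard deviation of 12 mmHg are assumed values for illustration, not figures from the study.

```python
from statistics import NormalDist

# Assumed population: mean 120 mmHg, standard deviation 12 mmHg.
bp = NormalDist(mu=120.0, sigma=12.0)

def share_between(dist, low, high):
    """Fraction of a normal population with values between low and high."""
    return dist.cdf(high) - dist.cdf(low)

for k in (1, 2, 3):
    low = bp.mean - k * bp.stdev
    high = bp.mean + k * bp.stdev
    share = share_between(bp, low, high)
    print(f"within {k} SD ({low:.0f} to {high:.0f} mmHg): {share:.1%}")
```

Whatever mean and standard deviation you plug in, roughly 68% of values fall within one standard deviation of the average, about 95% within two, and about 99.7% within three. A flatter or steeper bell only changes where those boundaries lie, not the shares.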

In statistics, especially when we use them to interpret study data, we always go to quite some effort to ensure that the data we measure are normally distributed. That's because many statistical tools don't give us reliable answers if the distribution is not normal.

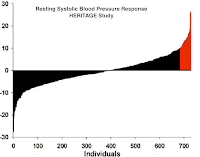

Back to our famous study. What you see in figure 2 is how the authors present their results for the blood pressure response of the HERITAGE participants.

Figure 2

For each individual (x-axis) they drew a thin bar representing the height of that person's change in blood pressure after 20 weeks of exercise. Bars extending below the x-axis represent reduced blood pressure, and those extending above the x-axis represent increased blood pressure. The bars in red are those of the people whose blood pressure increase was in excess of the error margin of about 8 mmHg.

Now, Claude Bouchard, the lead author of the paper, is being quoted in the NYT as saying that the counterintuitive observation of exercise causing systolic blood pressure to worsen "is bizarre".

Here is why it is neither counterintuitive nor bizarre: When we accept the blood pressure values of our study population to be distributed normally, we have every reason to expect the change in blood pressure to be distributed normally, too, especially since all participants went through the same type of intervention.

Figure 3

If we now run a computer simulation, using the same number of people, the same mean change in blood pressure, and the same error values, then we can construct a curve for this group, too. Which is what you see in figure 3. Eerily similar to the one in figure 2, isn't it?

That's because we are looking at a normal distribution of the biomarker called 'blood pressure change'. It is an inevitable fact of nature that a few of our participants will change "for the worse". And I'm putting this in inverted commas because we don't really know whether this change is for the worse.

After all, we are talking risk factors, not actual disease events. In the context of this study you need to keep in mind that all participants had normal blood pressure values to begin with. The average was about 120 mmHg. The mean change was reported as 0.2 mmHg. That's not only clinically insignificant, that's way below the measurement capability of clinical devices.
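A simulation of the kind behind figure 3 can be sketched in a few lines of Python. The number of participants (723), the mean change (0.2 mmHg) and the error margin (about 8 mmHg) are the figures quoted in this post. The standard deviation of the change is not given here, so the value of 6 mmHg below is purely an assumption for illustration, and the resulting share of "adverse" responders depends directly on it.

```python
import random
from statistics import NormalDist

N_PARTICIPANTS = 723   # HERITAGE participants, as quoted in the post
MEAN_CHANGE = 0.2      # mean change in systolic blood pressure, mmHg
ERROR_MARGIN = 8.0     # approximate error margin, mmHg
ASSUMED_SD = 6.0       # ASSUMPTION: spread of individual changes, mmHg

def simulate_changes(n, mean, sd, seed=0):
    """Draw n normally distributed changes and sort them, as in a bar plot."""
    rng = random.Random(seed)
    return sorted(rng.gauss(mean, sd) for _ in range(n))

changes = simulate_changes(N_PARTICIPANTS, MEAN_CHANGE, ASSUMED_SD)

# Participants whose simulated increase exceeds the error margin (the "red bars").
n_adverse = sum(c > ERROR_MARGIN for c in changes)

# The share the normal distribution predicts without any simulation.
expected_share = 1.0 - NormalDist(MEAN_CHANGE, ASSUMED_SD).cdf(ERROR_MARGIN)

print(f"simulated 'adverse' responders: {n_adverse} of {N_PARTICIPANTS}")
print(f"share expected from the bell curve: {expected_share:.1%}")
```

No participant in this simulation responds differently from any other: they all draw from the same bell curve. Some of them still land beyond the error margin, simply because a bell curve has tails.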

When I started to dig deeper into this study, I found quite a number of inconsistencies with earlier publications. For example, in the latest paper, the one discussed in the NYT, the number of HERITAGE participants was stated as 723. In a 2001 paper, which investigated participants' blood pressure change at a 50-Watt work rate, the number was stated as 503 [2]. In the same year Bouchard had published a paper putting this number at 723 [3]. Anyway, the observation that the blood pressure change during exercise was significantly larger (about -8 mmHg) than the marginal change of resting blood pressure indicates that there probably was some effect of exercise.

So, what's the take-home point of all this? With the "normal distribution" being a natural phenomenon that underlies so many biomarkers, it is neither bizarre nor in any other way astonishing to find "adverse" reactions in everything from pharmaceutical to behavioral interventions and treatments. Whether such reactions are truly adverse can't be answered by a study like the one that is now bandied about in the media. That's because risk factors are not disease endpoints. They are actually very poor predictors of the latter, as I have explained in my post "Why Risk Factors For Heart Attack Really Suck".

So, keep in mind that there is no treatment or intervention that has the same effect on everybody. Pharmaceutical research uses this knowledge, for example, when determining the toxicity of a substance. This toxicity is often defined as the LD50 value, that is, the lethal dose that kills 50% of the experimental animals. Meaning, the same dose that kills half the animals leaves the other half alive and kicking.

And correspondingly, the same dose of exercise that cures your neighbor of hypertension may have no effect on you. Because you belong to those 10% who react differently. But are these 10 good reasons not to exercise? How to deal with this question will be the subject of my next post. Until then, stay skeptical.

1. Bouchard, C., et al., Adverse Metabolic Response to Regular Exercise: Is It a Rare or Common Occurrence? PLoS ONE, 2012. 7(5): p. e37887.

2. Wilmore, J.H., et al., Heart rate and blood pressure changes with endurance training: the HERITAGE Family Study. Medicine and Science in Sports and Exercise, 2001. 33(1): p. 107-16.

3. Bouchard, C. and T. Rankinen, Individual differences in response to regular physical activity. Medicine and Science in Sports and Exercise, 2001. 33(6): p. S446-S451.


Andrew Gelman raised the same issues here on his statistical modeling blog: http://andrewgelman.com/2012/06/massive-confusion-about-a-study-that-purports-to-show-that-exercise-may-increase-heart-risk/.

The really good stuff was in the comments. Here is one that is particularly acute: "You don’t even need variable treatment effects. The first claim (10% drop by 2 SDs on 1/6) is totally unsurprising if the changes in biomarkers are iid normal (the null expectation is about 12%). The second claim (7% on 2/6) is surprising if they are iid, but not if some of them are highly correlated (they are) or if the normality assumption is bad (glancing at the data, I think it is)."

Thanks for this enlightening link, Phil. Nice to see that I'm not the only one taking issue with this.

C. Ryan King's comment on the correlation is spot on: TG and HDL are definitely correlated. And his suspicion that the normality assumption is bad is justified, since participants had been recruited predominantly as families of 5.

What always amazes me is how some fluff papers make it through the peer review process, when less "credentialed" authors struggle to get good content published.

This is very interesting data.

I am always surprised that we can get such contrary indications from different scientists.

hmmm

very interesting post..